The Evolution of Software Development Methodologies

From Waterfall on Punch Cards to DevOps in the Cloud

Early Mainframe Era (1950s)

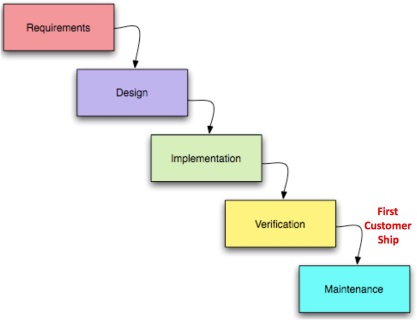

In the late 1950s, second-generation computers, which were transistor-based and replaced the enormous punch-card-fed vacuum-tube machines, brought computers into widespread commercial use. These mainframes were fed with punch cards that took days or weeks to “program” and that had to be loaded into the computer during a small available timeslot. CPU time was extremely expensive, so programs were written off-line and prepared extensively, following the Waterfall method. You had only one bullet, and it had to be on target.

It was called "waterfall" because teams completed each stage fully before moving on to the next: requirements had to be complete before functional design, functional design complete before detailed design, and so on. Once a stage was finished, it was frozen in time.

Source: Agility Beyond History

Storage and Terminals

The introduction of magnetic storage in the 1950s and terminals in the late 1960s sped up software development, but the process was still based on punch-card practices, with long development cycles following the Waterfall model. Fixes for defects (bugs) and missing features, as well as change requests, had to go through the complete Waterfall cycle again. Developing a large system often took years, and many projects ran over budget and behind schedule.

The Waterfall model originates from the manufacturing and construction industries, where after-the-fact changes are prohibitively costly. Punch cards were similar: only small modifications were possible, hence the Waterfall approach. Electronically stored data, however, can be copied and modified instantly, and CPU power was becoming less scarce, so departing from the old practices had been technically possible since the 1960s.

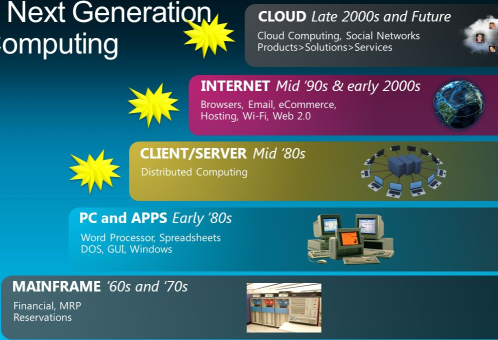

Computing over the years

The PC Era

After the Mainframe Era came PCs (with their killer apps), client/server computing, and the Internet, yet Waterfall remained the dominant development method in many cases.

In the 1990s, during the application development crisis, rapid development tools combined with affordable PC processing power made Agile development practically possible. Agile became the natural choice for web applications, because users often could not describe what they wanted: they didn’t know what was possible. The only way forward was iterative development, demonstrating what was technically possible and co-developing the first web applications together with users.

Scrum and DevOps

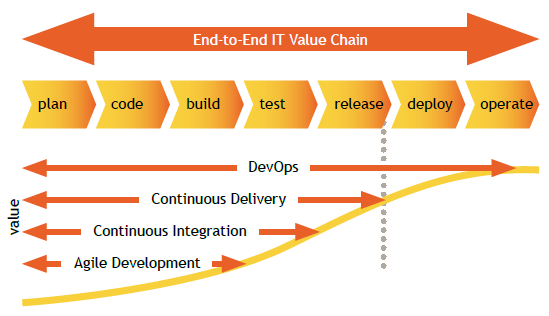

This Agile approach revealed another challenge: IT development and IT operations were far apart. A quick-and-dirty mock-up “developed” on a laptop raised users’ expectations but was far from production-ready.

Bringing a system from development into production takes time and effort. Operations teams (OPS) have valid concerns: they are responsible for deploying, running, and supporting the system, and they take the first hit from unsatisfied users.

To address this tension, DevOps emerged, integrating development and operations to bring software into production faster and more reliably.

DevOps and Scrum in the IT value chain

Read also

[Software delivery, how do the BIG guys do it?](/software delivery how do they do it/)